If a page isn’t in Google’s index, there’s 0% chance that it will receive organic traffic.

Indexation, in an oversimplified nutshell, is step 2 in Google’s ranking process:

- Crawling

- Indexing

- Ranking

This article will focus on how to get Googlebot to index more pages on your site, faster.

How to check if your pages are indexed by Google

The first step is understanding what your website’s indexation rate is.

Indexation rate = # of pages in Google’s index / # of pages on your site
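To make the formula concrete, here is a minimal sketch in Python. The function name `indexation_rate` and the page counts are made up for illustration; plug in the numbers from your own Search Console report and crawl.

```python
def indexation_rate(indexed_pages: int, total_pages: int) -> float:
    """Return the share of a site's pages that are in Google's index (0.0 to 1.0)."""
    if total_pages <= 0:
        raise ValueError("total_pages must be a positive number")
    return indexed_pages / total_pages


# Hypothetical figures: 1,200 pages indexed out of 1,500 pages on the site.
rate = indexation_rate(1200, 1500)
print(f"Indexation rate: {rate:.0%}")  # Indexation rate: 80%
```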

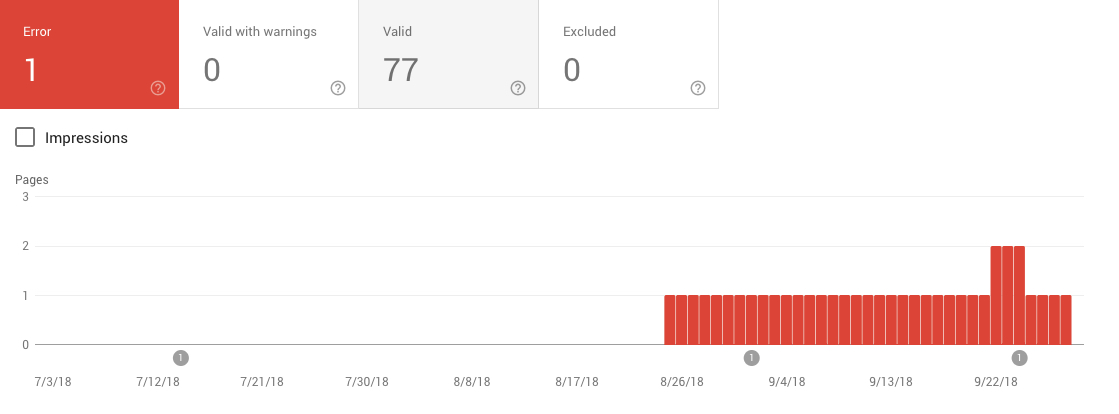

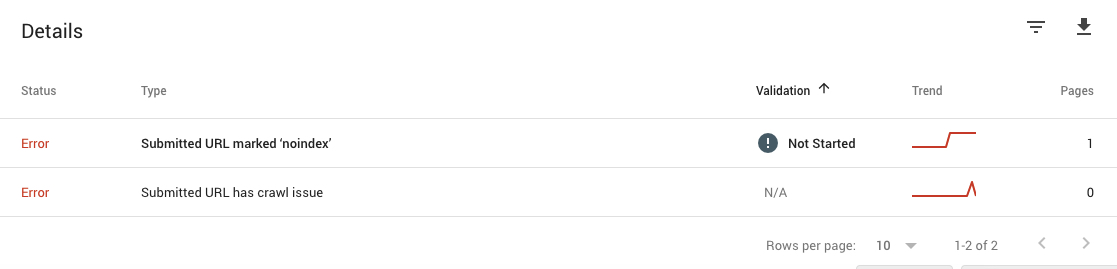

You can review how many of your website’s pages are indexed in Google Search Console’s “Index Coverage Status Report”.

If you see errors or a large number of pages outside of the index:

- Your sitemap might have URLs that are non-indexable (e.g. pages set to NOINDEX, blocked via robots.txt or requiring a user login)

- Your site might have a large number of ‘low quality’ or duplicate pages that Google deems unworthy

- Your site might not have enough ‘authority’ to justify all the pages
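If you suspect the first cause, you can spot-check a URL yourself. The sketch below uses only Python’s standard library to test a URL against robots.txt rules and to look for a robots NOINDEX meta tag. The helper names (`blocked_by_robots`, `has_noindex`), the sample rules and the sample HTML are all hypothetical, and it ignores other signals such as the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Records whether a page has a robots/googlebot meta tag containing noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True


def blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text disallows `agent` from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


def has_noindex(html: str) -> bool:
    """True if the HTML contains a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


# Hypothetical robots.txt and page source, pasted in as strings.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(blocked_by_robots(robots_txt, "https://example.com/private/report"))  # True
print(has_noindex(page_html))  # True
```

Any URL that comes back `True` on either check probably doesn’t belong in your sitemap.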

You can dig into the specifics in the table underneath (this is an awesome new feature in Google’s updated Search Console).

How to get pages on your site indexed

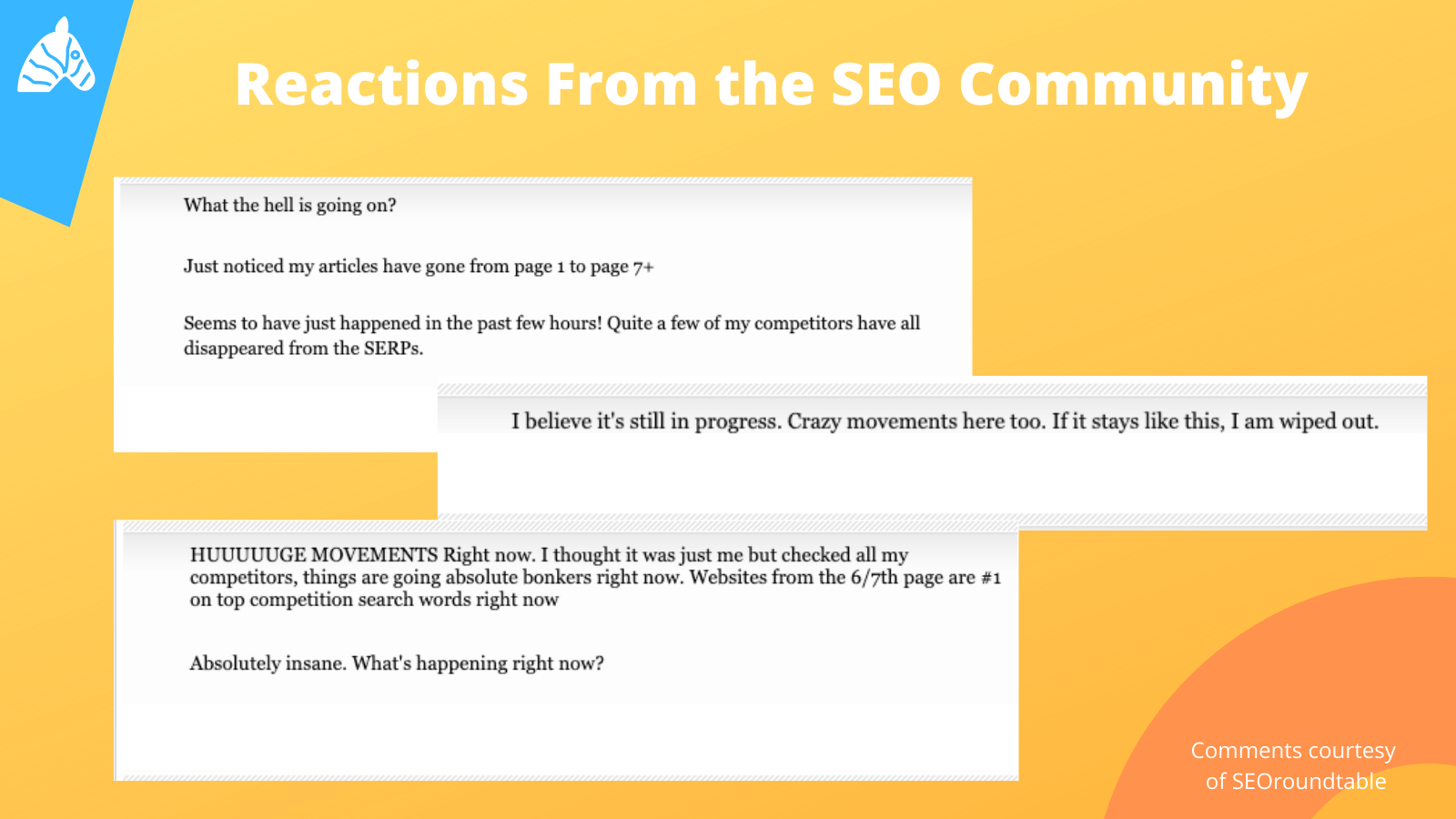

I hate to be cliché, but you really need to deliver the right experience to get Google’s attention. If your site doesn’t meet Google’s guidelines with regard to trust, authority and quality, these tips will likely not work for you.

With that being said, you can use these tactics to improve your site’s indexation rate.

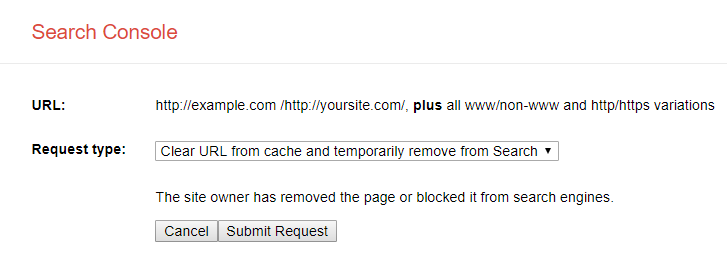

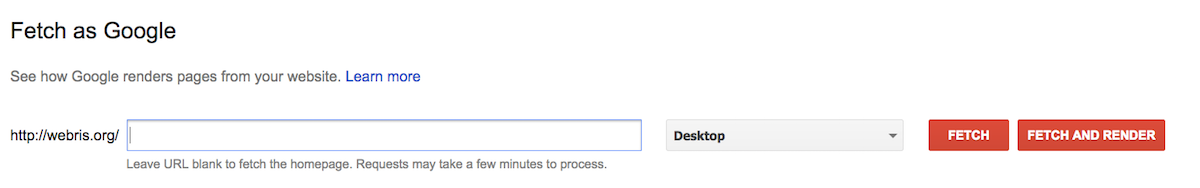

1. Use Fetch As Google

Google Search Console has a feature allowing you to input a URL for Google to “Fetch”. After submission, Googlebot will visit your page and index it.

Here’s how to do it…

- Log into Google Search Console

- Navigate to Crawl > Fetch as Google

- Take the URL you’d like indexed and paste it into the search bar

- Click the Fetch button

- After Google has found the URL, click Submit to Index

Assuming the page is indexable, it will typically be picked up within a few hours.

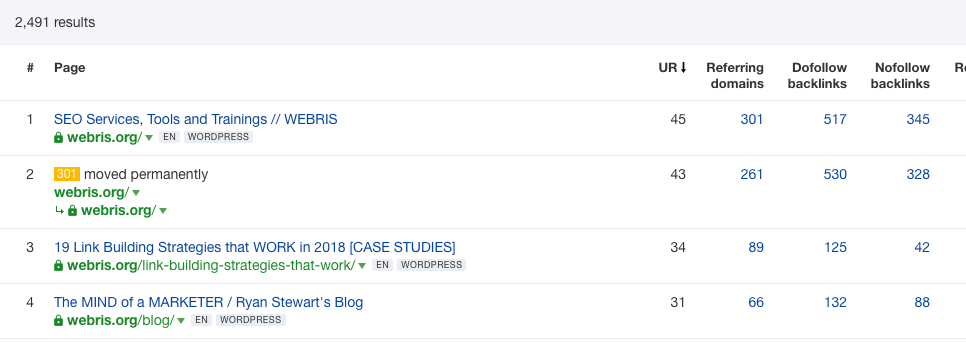

2. Use internal links

Search engines crawl from page to page through HTML links.

You can use authority pages on your site to push equity to others. I like to use Ahrefs’ “best pages by links” report.

This report tells me the most authoritative pages on my site – I can simply add an internal link from here to a page that needs equity.

It’s important to note that the two interlinked pages need to be relevant to each other – it’s not a good idea to link unrelated pages together.
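Since crawlers move through HTML links, it helps to see which internal links a page actually exposes. Here is a rough sketch using Python’s standard library; the `LinkCollector` class, the `internal_links` helper and the sample HTML are hypothetical, and it only reads plain `<a href>` tags (no JavaScript-rendered links).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/guide" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def internal_links(html: str, page_url: str) -> list:
    """Return the links on the page that point to the same host as page_url."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlparse(page_url).netloc
    return [link for link in collector.links if urlparse(link).netloc == host]


# Hypothetical page source for one of your authority pages.
html = '<a href="/guide">Guide</a> <a href="https://other.example/x">Elsewhere</a>'
print(internal_links(html, "https://example.com/blog/"))
# ['https://example.com/guide']
```

If a page you want indexed never shows up in the output for any of your authority pages, that’s a good candidate for a new internal link.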

Read my guide about internal linking silos

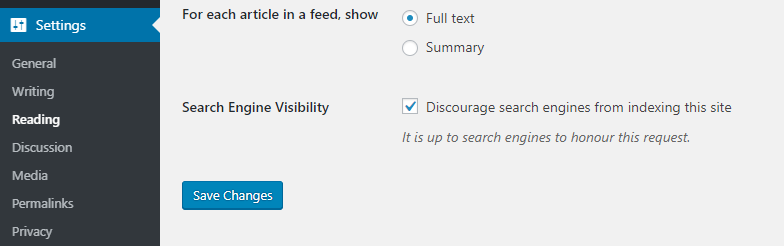

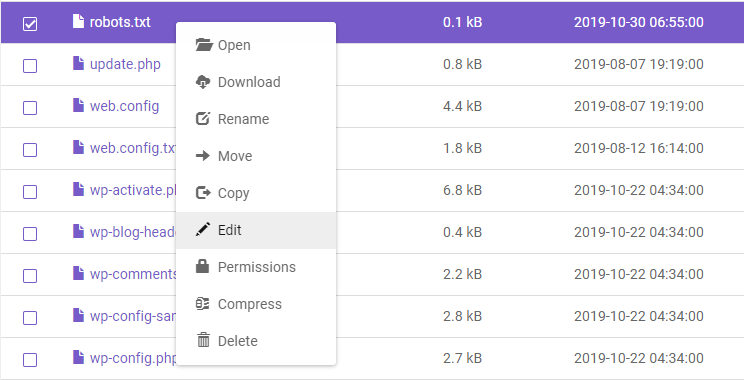

3. Block low quality pages from Google’s index

While content is a cornerstone of a high quality website, the wrong content can be your demise. Too many low quality pages can decrease the number of times Google crawls, indexes and ranks your site.

For that reason, we want to periodically “prune” our websites by removing the garbage pages.

Pages that serve no value should be:

- Set to NOINDEX. When the page still has value to your audience, but not search engines (think thank you pages, paid landing pages, etc).

- Blocked from crawling via the robots.txt file. When an entire set of pages has value to your audience, but not search engines (think archives, press releases).

- 301 redirected. When the page has no value to your audience or search engines, but has existing traffic or links (think old blog posts with links).

- Deleted (404). When the page has no value to your audience or search engines, and has no existing traffic or links.
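The four rules above boil down to a small decision tree. Here is one way to sketch it in Python. The `Page` fields and the `pruning_action` function are hypothetical names of my own, and the yes/no judgments that feed them still have to come from your own audit.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    useful_to_audience: bool
    useful_to_search: bool
    has_traffic_or_links: bool
    # True when the page belongs to a whole section (e.g. archives) with no search value.
    part_of_low_value_section: bool = False


def pruning_action(page: Page) -> str:
    """Map a page's audit flags to one of the pruning actions listed above."""
    if page.useful_to_search:
        return "keep"
    if page.useful_to_audience:
        # Valuable to people but not to search engines.
        return "robots.txt" if page.part_of_low_value_section else "noindex"
    # No value to anyone: preserve existing equity if there is any, otherwise delete.
    return "301 redirect" if page.has_traffic_or_links else "delete (404)"


thank_you = Page("/thank-you", useful_to_audience=True,
                 useful_to_search=False, has_traffic_or_links=False)
print(pruning_action(thank_you))  # noindex
```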

We’ve built a content audit tool to help you with this process.

4. Include the page in your sitemap

Your sitemap is a guide to help search engines understand which pages on your site are important.

Having a page in your sitemap does NOT guarantee indexation, but failing to include important pages will decrease indexation.
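If you’re curious what a sitemap looks like under the hood, here is a minimal sketch that builds one with Python’s standard library. It follows the basic sitemaps.org format (`urlset` > `url` > `loc`) and leaves out optional fields like `lastmod`; the `build_sitemap` name and the example URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return a bare-bones XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs: list only the pages you actually want indexed.
print(build_sitemap(["https://example.com/", "https://example.com/blog/my-post"]))
```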

If your site is running on WordPress, it’s incredibly easy to set up and submit a sitemap using a plugin (I like Yoast).

Read more about how to build a sitemap

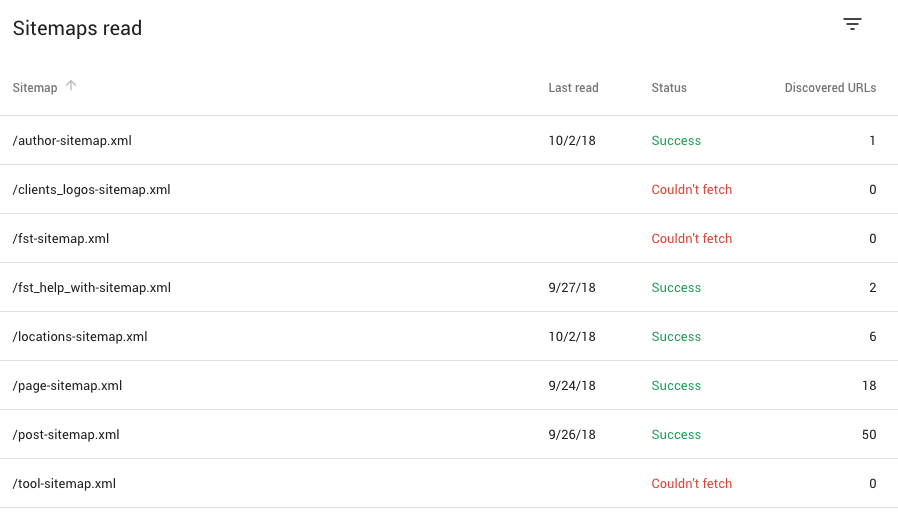

Once your sitemap is built and submitted in GSC, you can review it in the Sitemaps report.

Double check to make sure all pages you want indexed are included. Triple check to make sure all pages you DON’T want indexed are NOT included.
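That double and triple check is easy to script. The sketch below parses a sitemap and compares it against two lists you maintain yourself: pages you want indexed and pages you want kept out. The function names, the dictionary keys and the sample data are all hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set:
    """Return the set of <loc> URLs listed in a sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def audit_sitemap(xml_text: str, want_indexed, keep_out) -> dict:
    """Report wanted pages missing from the sitemap and unwanted pages listed in it."""
    listed = sitemap_urls(xml_text)
    return {
        "missing": sorted(set(want_indexed) - listed),
        "should_remove": sorted(set(keep_out) & listed),
    }


# Hypothetical sitemap contents and page lists.
sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/thank-you</loc></url>"
    "</urlset>"
)
report = audit_sitemap(
    sitemap,
    want_indexed=["https://example.com/", "https://example.com/pricing"],
    keep_out=["https://example.com/thank-you"],
)
print(report)
# {'missing': ['https://example.com/pricing'], 'should_remove': ['https://example.com/thank-you']}
```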

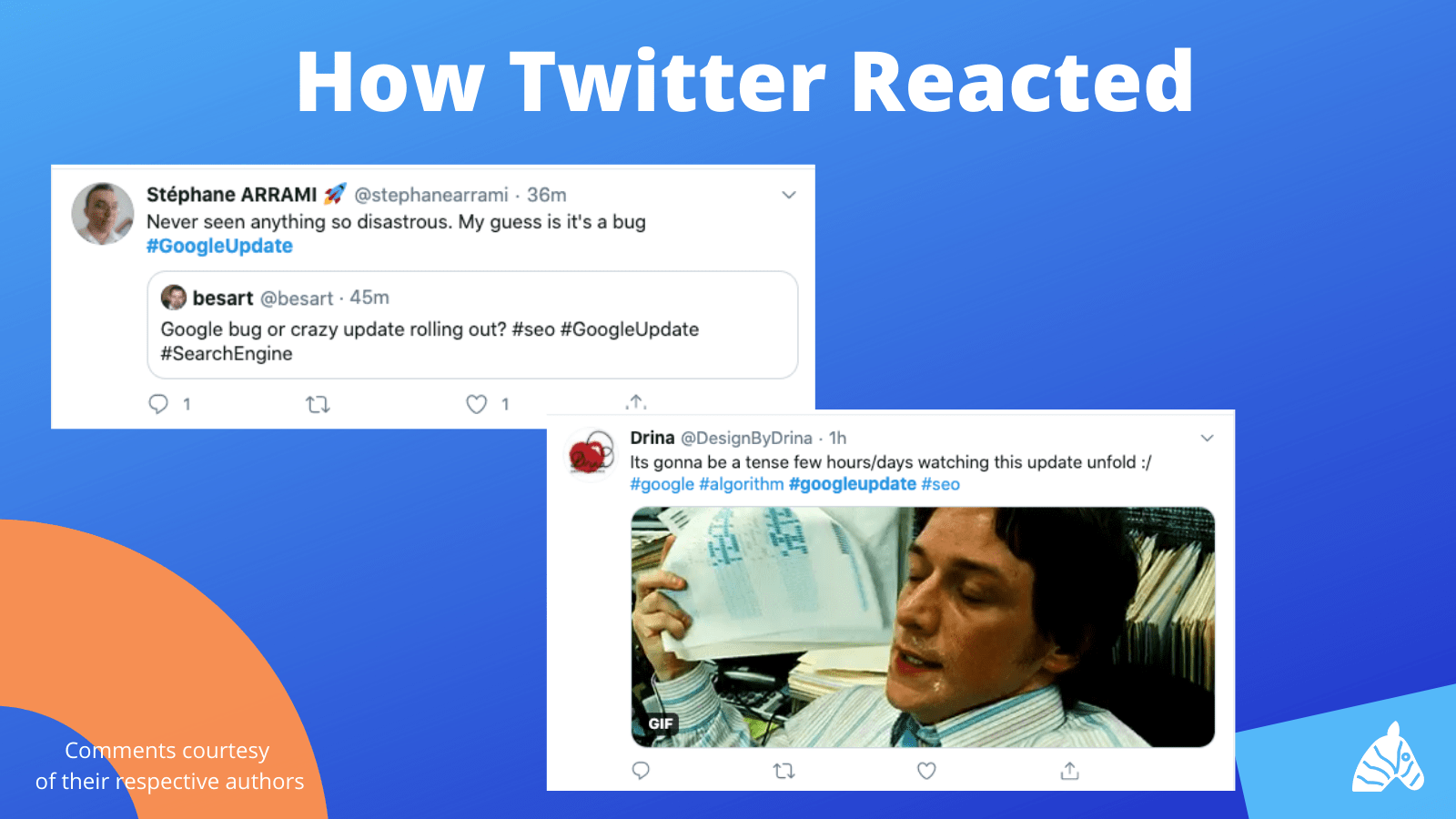

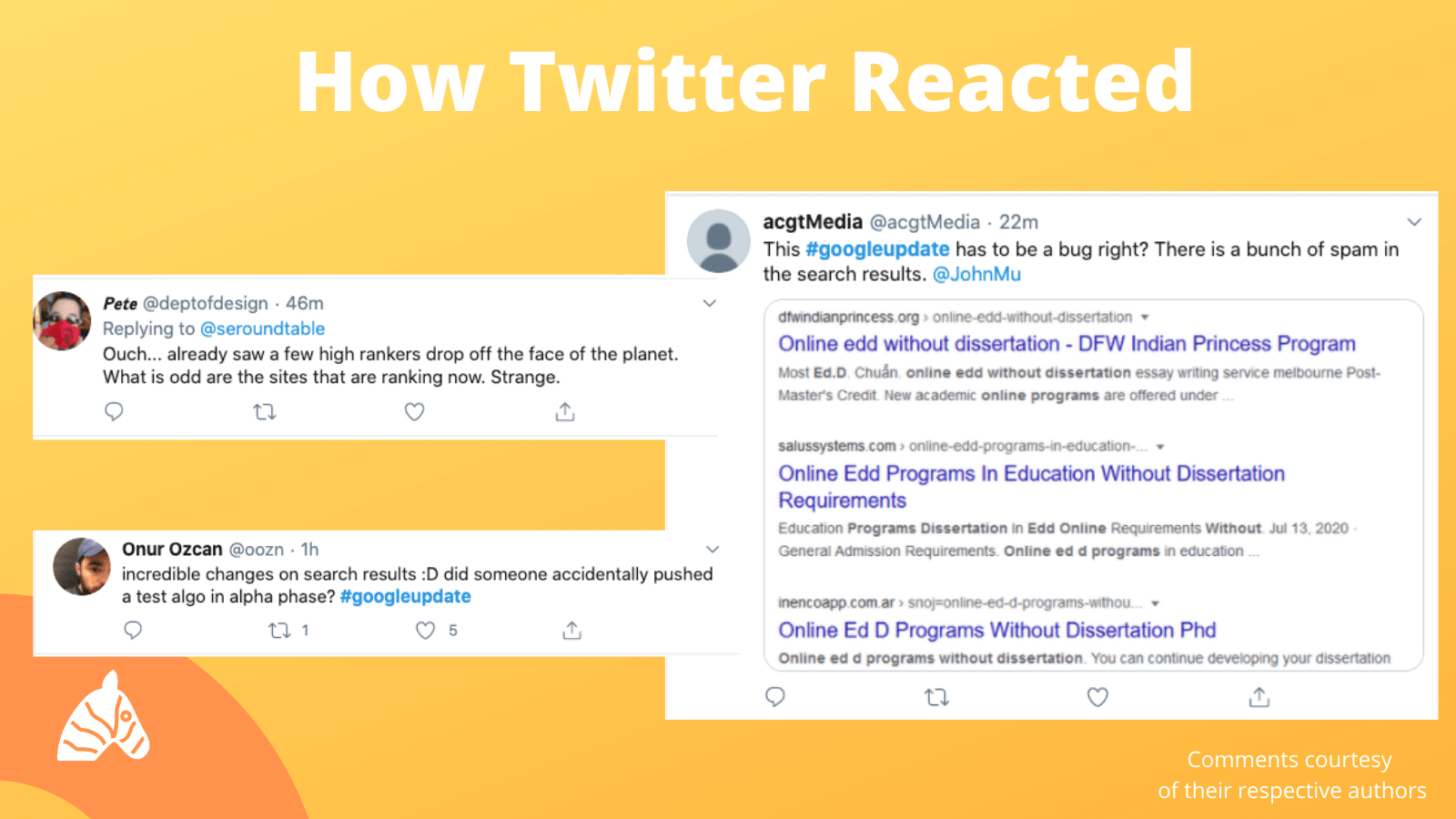

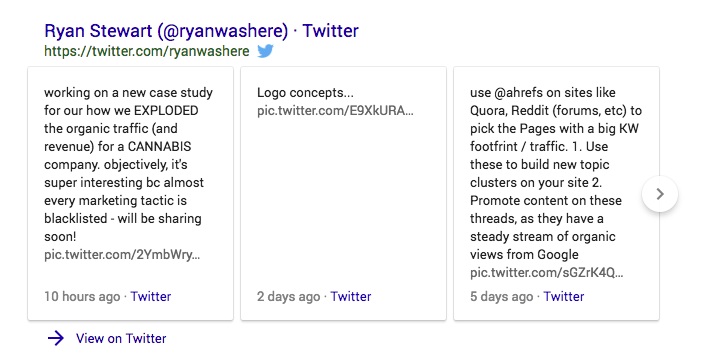

5. Share the page on Twitter

Twitter is a powerful network that Google crawls regularly (they index Tweets, too).

It’s a no brainer to share your content on social media, but it’s also an easy way to give Google a nudge.

6. Share the page on high traffic sites

Reddit and Quora are popular sites that allow you to drop links. I make it a regular practice to promote recently published pages on Quora – it helps with indexation, and it can also drive a ton of traffic.

If you’re feeling lazy (and grey hat), you can buy “social signals” on sites like Fiverr.

7. Secure external links to the page

As previously mentioned, Google crawls from page to page through HTML links.

Getting other websites to link to yours is not only a huge ranking factor, but also a great way to speed up the indexation of your website.

The easiest ways to get links:

- Guest post on a relevant, authoritative website

- Find relevant bloggers or media sites and reach out with an advertising request

This is grossly oversimplified – you can check out my top link building tactics for more ideas.

8. “Ping” your website

Sites like Ping-O-Matic send “pings” to search engines to notify them that your blog has been updated.

Honestly, it’s not the greatest method – but it’s fast, free and easy to use.
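If you’d rather script it, ping services of this kind have traditionally accepted an XML-RPC call named `weblogUpdates.ping` with your site name and URL. The sketch below builds that request with Python’s standard library. The endpoint URL is my assumption, so check the service’s own documentation before relying on it, and note that `send_ping` is defined but deliberately not called here.

```python
import xmlrpc.client

# Assumed endpoint; confirm it against the ping service's documentation.
PING_ENDPOINT = "http://rpc.pingomatic.com/"


def build_ping_request(site_name: str, site_url: str) -> str:
    """Return the XML-RPC request body for a weblogUpdates.ping call."""
    return xmlrpc.client.dumps((site_name, site_url), methodname="weblogUpdates.ping")


def send_ping(site_name: str, site_url: str, endpoint: str = PING_ENDPOINT):
    """Send the ping over the network and return the service's response."""
    server = xmlrpc.client.ServerProxy(endpoint)
    return server.weblogUpdates.ping(site_name, site_url)


# Placeholder site details; this only prints the payload, it sends nothing.
print(build_ping_request("My Blog", "https://example.com/"))
```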

Source: https://webris.org/google-index/